Storage is perhaps one of the thorniest issues in computing, whether you are looking at an enterprise datacenter or an HPC environment. A storage system has to have enough capacity and enough performance to serve the needs of applications and users, with the extra complication that different workloads have differing performance requirements.

For enterprises, this need has typically been met through a SAN, connecting clusters of servers to one or more external storage arrays through a high-performance data fabric, with the storage arrays delivering features such as quality of service, replication and snapshots.

More recently, software-defined storage solutions have been promoted as a more scalable and cost-effective alternative by filling a cluster of industry-standard server boxes with storage and using a software layer such as VMware vSAN or Red Hat Gluster to create a shared storage pool from those resources.

However, software-defined storage has its own drawbacks, not least of which is that it uses up valuable CPU cycles if you are running applications on the same server cluster that is serving up the storage, as with hyperconverged infrastructure (HCI). This load can account for up to 25 percent of the CPU’s time, according to some estimates.

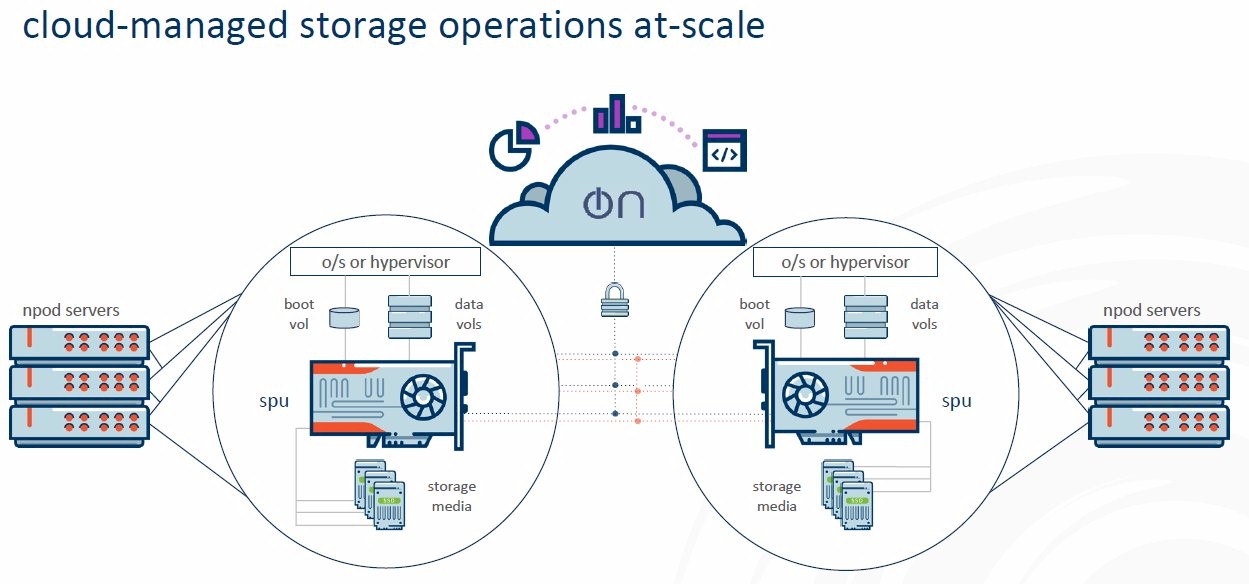

One company that is exploring an alternative to both a SAN and software-defined storage is Nebulon, a startup founded in 2018 which recently came out of stealth mode with a platform it calls Cloud-Defined Storage, as it makes use of a cloud-hosted control plane to configure and manage the storage hardware. This enables an IT administrator to manage thousands of servers across multiple sites, while reducing costs and the amount of time IT staff spend on operations, according to the firm.

Driving down storage cost and complexity for enterprise was one of Nebulon’s aims, according to chief executive Siamak Nazari, who like several others at Nebulon was formerly a top executive at 3PAR before that storage firm was acquired by Hewlett Packard Enterprise. He recounts a conversation he once had with a CIO.

“I’m buying storage arrays, they are expensive, there are multiple tiers that I have to set up, yet I’m also buying all these servers and every single one of these servers that I buy has this slot right in front of them,” the CIO reportedly asked. “Why do you make me buy these additional expensive services and equipment? Why can’t they just use this sea of storage slots that I already have in my servers?”

The answer, of course, has already been noted: using the built-in storage of the servers typically requires an extra software layer, and according to Nazari this might interact with the operating system in unforeseen ways and cause compatibility issues, or even affect the performance of key applications.

“These folks have tried software defined storage or hyperconverged, but because of service level agreements or workload restrictions that come with software defined storage, they’re going back to external storage arrays,” he claims.

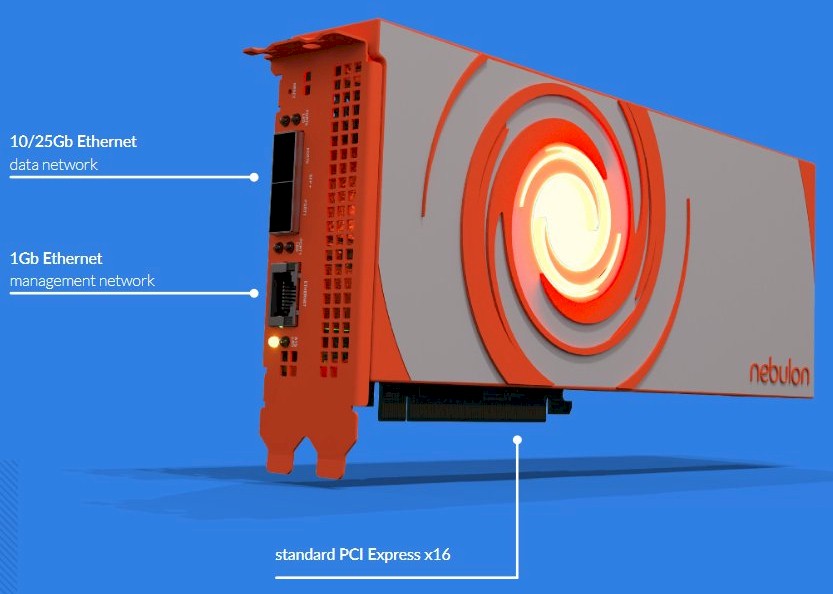

Nebulon’s architecture can be regarded as a kind of mash-up of a traditional SAN and software-defined storage, borrowing some aspects of both. It uses a PCIe adapter card inside each server node, which Nebulon dubs a services processing unit (SPU), to control the storage drives, much as you might traditionally install a RAID controller. Each SPU runs the data services and, because it controls the storage drives directly, is likened by the firm to a miniaturized storage array controller inside every server.

If that were as far as it went, Nebulon’s storage would have few advantages over external storage arrays. However, each SPU adapter also sports its own network ports, which link it via a network fabric to the SPUs in other server nodes, plus a separate connection for the management network. The management layer can combine a number of SPUs into a logical grouping that forms a shared storage pool, or data domain, which Nebulon calls an nPod.

An SPU is implemented as a full-length, double-width PCI-Express 3.0 adapter, and is based on an eight-core Arm system-on-chip (SoC) running at 3 GHz plus a dedicated accelerator chip to handle encryption services. Each card has two 25 Gb/sec Ethernet ports for the data fabric that links it with other SPUs, and a further 1 Gb/sec port for the separate management fabric that links with the Nebulon ON control plane. The card itself appears to the system as a SAS host bus adapter (HBA), avoiding the need for special drivers, according to Nebulon.

Up to 32 SPUs can be linked in a single nPod or data sharing domain, and each SPU card can control up to 24 SSDs. With SSD capacities of up to 4 TB supported at launch, each nPod can scale to encompass petabytes of capacity. Currently, an nPod cannot extend beyond a single site, but this may change with support for stretched clusters at a later date, according to Nebulon.
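As a rough check on the "petabytes" claim, the limits quoted above can be encoded in a small Python model. The class names `SPU` and `NPod` and the validation rules are illustrative assumptions built only from the figures in this article, not Nebulon's software, and the total is raw capacity before any data protection or data reduction.

```python
from dataclasses import dataclass, field

# Launch limits as quoted by Nebulon; the model itself is hypothetical.
MAX_SPUS_PER_NPOD = 32
MAX_SSDS_PER_SPU = 24
MAX_SSD_TB = 4.0


@dataclass
class SPU:
    site: str
    ssd_sizes_tb: list[float] = field(default_factory=list)

    def raw_capacity_tb(self) -> float:
        return sum(self.ssd_sizes_tb)


@dataclass
class NPod:
    spus: list[SPU] = field(default_factory=list)

    def add(self, spu: SPU) -> None:
        if len(self.spus) >= MAX_SPUS_PER_NPOD:
            raise ValueError("an nPod holds at most 32 SPUs")
        if len(spu.ssd_sizes_tb) > MAX_SSDS_PER_SPU:
            raise ValueError("an SPU controls at most 24 SSDs")
        if any(size > MAX_SSD_TB for size in spu.ssd_sizes_tb):
            raise ValueError("SSDs larger than 4 TB are not supported at launch")
        # No stretched clusters yet, so every SPU must sit at the same site.
        if self.spus and spu.site != self.spus[0].site:
            raise ValueError("an nPod cannot span more than one site")
        self.spus.append(spu)

    def raw_capacity_tb(self) -> float:
        return sum(s.raw_capacity_tb() for s in self.spus)


# Fill an nPod to its maximum: 32 SPUs, each with 24 x 4 TB SSDs.
pod = NPod()
for _ in range(MAX_SPUS_PER_NPOD):
    pod.add(SPU(site="site-a", ssd_sizes_tb=[MAX_SSD_TB] * MAX_SSDS_PER_SPU))

print(pod.raw_capacity_tb())  # 3072.0
```

That works out to 32 × 24 × 4 TB = 3,072 TB, or roughly 3 PB of raw flash in a fully populated nPod.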

The advantage of this arrangement, according to Nebulon, is that each SPU adapter is largely independent from its host server – it does not rely on the host for network access, so if the host should crash for some reason, the SPU will continue to serve up storage so long as the system is still drawing power. Likewise, the SPU does not use up any CPU cycles or system memory to provide storage services, meaning that the whole of the host processing power is dedicated to running applications. Another benefit, according to Nazari, is that the entire solution ends up costing about half of what a comparable deployment with an enterprise storage array would cost.

“It is primarily because you’re leveraging commercial, less costly server SSDs that are shipped by the server vendors essentially by the millions, as opposed to the special SSDs that storage vendors are shipping in their systems,” he explains.

Nebulon is able to take advantage of those less costly server SSDs because the server vendors will be the company’s route to market. It has already signed agreements with HPE and Supermicro, which will offer Nebulon SPUs as a build-time option in some of their server models, and a third vendor has apparently joined them but has yet to be disclosed. The server vendors also act as the point of contact for customer support, according to Nazari.

“In the same way that customers are asking for fewer components in their datacenter, they’re also asking for fewer vendors to deal with, and this allows the customer to essentially not deal with us as a vendor. They just buy it from the server OEMs they already have contractual agreements with; service agreements, all those things, just go through the existing agreements that the customers have with the OEMs,” he says.

Another notable aspect of Nebulon’s architecture is that the control plane is separated out from the SPU hardware and is delivered as a cloud-hosted service known as Nebulon ON, while the SPU runs a lightweight storage OS called nebOS. This can perhaps be thought of as analogous to the way that software-defined networks separate out the control plane from the data plane to deliver centralized policy-based control over a whole network, rather than embedding a controller into every physical switch.

“If you look at a traditional storage software stack, about 25 percent of that software stack is a very optimized, small piece of software that’s driven around the whole context of storage IO,” says Martin Cooper, Nebulon’s Senior Director of Solutions Engineering.

“So writing data, reading data, deduplication, data compression, encryption, data replication, snapshots, all that IO stuff is about 25 percent of a software stack, while 75 percent of it is management reporting and orchestration, and the interesting bit is that when you split that relationship, you can deliver all the management and orchestration stuff as a service from a cloud,” he says.

Nebulon claims that the storage plane features running on the SPU cards, such as data compression and snapshots, tend not to change very frequently, although the firmware can be updated from the cloud-based console. In contrast, the management and orchestration software is updated regularly, so it makes sense to deliver it as SaaS, which means that all Nebulon customers automatically get new features and updates as they are rolled out.

Having a cloud-based control plane for something as mission-critical as your storage systems may be a cause for concern for many enterprises, but Nebulon ON is hosted on both Amazon’s AWS and Google’s Cloud Platform and is geographically distributed to reduce the risk of it going offline due to an outage at a cloud datacenter. Even in the event of such an outage, the storage plane running on the SPU hardware will continue to function normally, although the customer would be unable to change the configuration of the nPods or provision new storage until the service is restored.
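The failure mode described above follows from separating the data plane from the control plane. The toy Python sketch below illustrates that split under stated assumptions: every class and method name is hypothetical and none of it reflects nebOS or Nebulon ON internals. IO keeps flowing through the SPU-side object while a provisioning request fails during a simulated cloud outage.

```python
class ControlPlaneUnavailable(Exception):
    """Raised when the (hypothetical) cloud control plane cannot be reached."""


class SPUDataPlane:
    """Toy stand-in for the SPU: serves reads and writes locally, with no cloud calls."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}

    def write(self, lba: int, data: bytes) -> None:
        self.blocks[lba] = data

    def read(self, lba: int) -> bytes:
        # Unwritten blocks read back as empty in this toy model.
        return self.blocks.get(lba, b"")


class CloudControlPlane:
    """Toy stand-in for the SaaS control plane: management operations only."""

    def __init__(self) -> None:
        self.online = True
        self.volumes: list[str] = []

    def provision_volume(self, name: str) -> None:
        if not self.online:
            raise ControlPlaneUnavailable("cannot provision while the control plane is offline")
        self.volumes.append(name)


cloud = CloudControlPlane()
spu = SPUDataPlane()

cloud.provision_volume("db-data")  # normal operation: management succeeds
cloud.online = False               # simulate an outage at the cloud provider

spu.write(0, b"still works")       # the data path is unaffected
print(spu.read(0))                 # b'still works'

try:
    cloud.provision_volume("db-logs")
except ControlPlaneUnavailable as err:
    print("management blocked:", err)
```

The design point is that nothing on the IO path calls out to the cloud, so losing the control plane degrades manageability rather than availability.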

Security may be another concern, but Nebulon supports role-based access control and operates what it refers to as a security triangle between the user, the cloud service and the target SPU, according to Cooper. This boils down to Nebulon ON checking whether the user trying to access the management console is behind the same firewall or on the same network as the systems they are managing, which Cooper claims is no less secure than delivering the same service from an on-premises management appliance.
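A minimal sketch of how such a check could combine with role-based access control is shown below. It assumes, purely for illustration, that the cloud service compares the public source address it sees for the user's session with the one it sees for the SPU's management connection (for example, both egressing through the same firewall). The role table, function names and addresses are hypothetical; Nebulon has not published how its check works.

```python
import ipaddress

# Hypothetical role-to-permission table for role-based access control.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "admin": {"view", "provision", "update_firmware"},
    "operator": {"view", "provision"},
    "viewer": {"view"},
}


def same_network(user_src: str, spu_src: str, prefix_len: int = 32) -> bool:
    """Assumed check: is the user's observed source address inside the network
    the SPU's management port connects from? prefix_len=32 demands an exact
    match, as when both sit behind one NAT firewall."""
    spu_net = ipaddress.ip_network(f"{spu_src}/{prefix_len}", strict=False)
    return ipaddress.ip_address(user_src) in spu_net


def authorize(role: str, action: str, user_src: str, spu_src: str) -> bool:
    # First leg: the user's role must permit the requested action.
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return False
    # Remaining legs: the cloud service relates the user to the target SPU
    # by requiring that both appear to come from the same network.
    return same_network(user_src, spu_src)


# Addresses below are from the reserved documentation ranges.
print(authorize("operator", "provision", "203.0.113.7", "203.0.113.7"))   # True
print(authorize("operator", "provision", "198.51.100.4", "203.0.113.7"))  # False
print(authorize("viewer", "provision", "203.0.113.7", "203.0.113.7"))     # False
```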

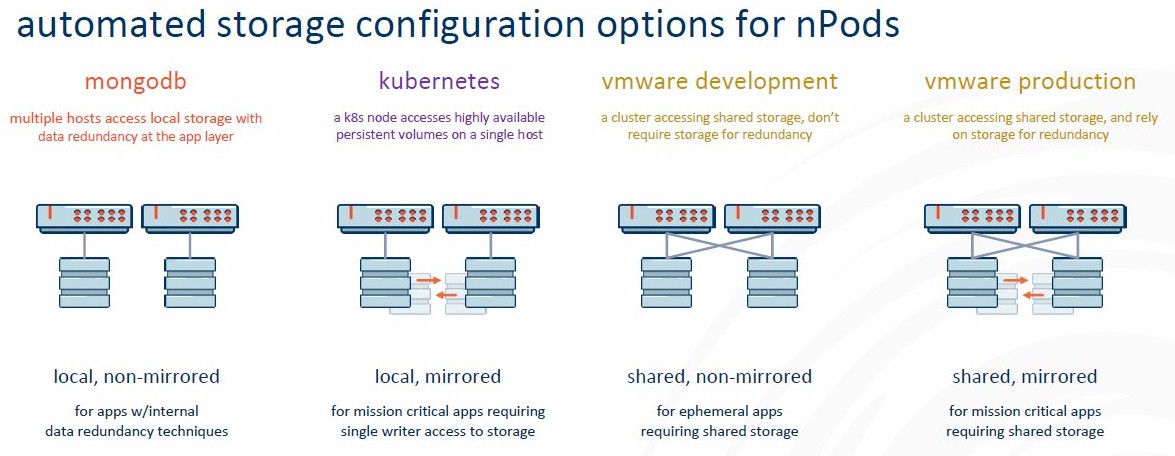

To simplify management and provisioning of storage nPods, Nebulon uses workload-aware templates, which the company describes as being akin to recipes that define what the configuration should look like for a specific application or service, whether that is to deliver an nPod cluster to support MongoDB, Kubernetes, or a VMware deployment.

“If you think about all the basic primitives that you want to define as an application service, what size are the LUNs? What’s the snapshotting schedule that I want to run on those LUNs? What’s the retention policy that I want to have? What kind of application is it, therefore what kind of data layout do I need to achieve high availability? Do I have a boot volume, if I have a boot volume, what’s the operating system that I want to put into that boot volume? All that information comes into what we call a template,” explains Cooper.
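The primitives Cooper lists map naturally onto a small data structure. The Python sketch below is a hypothetical template format, not Nebulon's schema: the field names, the application-to-layout mapping and the example values are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from application type to data layout; a real
# platform would derive this from its own high-availability rules.
LAYOUT_BY_APP: dict[str, str] = {
    "mongodb": "spread across SPUs, relying on MongoDB replica sets",
    "vmware": "mirrored across two SPUs",
}


@dataclass(frozen=True)
class SnapshotPolicy:
    interval_hours: int   # how often each LUN is snapshotted
    retention_days: int   # how long snapshots are kept


@dataclass(frozen=True)
class BootVolume:
    size_gb: int
    os_image: str         # operating system to install into the boot volume


@dataclass(frozen=True)
class AppTemplate:
    name: str
    app_type: str         # what kind of application it is
    lun_count: int
    lun_size_gb: int      # what size the LUNs are
    snapshots: SnapshotPolicy
    boot: Optional[BootVolume] = None  # boot volumes are optional

    def data_layout(self) -> str:
        return LAYOUT_BY_APP.get(self.app_type, "mirrored across two SPUs")

    def total_capacity_gb(self) -> int:
        boot_gb = self.boot.size_gb if self.boot else 0
        return self.lun_count * self.lun_size_gb + boot_gb


mongo = AppTemplate(
    name="mongodb-small",
    app_type="mongodb",
    lun_count=3,
    lun_size_gb=500,
    snapshots=SnapshotPolicy(interval_hours=4, retention_days=14),
    boot=BootVolume(size_gb=100, os_image="ubuntu-20.04"),
)

print(mongo.total_capacity_gb())  # 1600
print(mongo.data_layout())
```

Making the template immutable (`frozen=True`) reflects the idea of a recipe: provisioning reads it, and changing a configuration means publishing a new template rather than editing one in place.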

When Cloud-Defined Storage becomes generally available, Nebulon intends to ship the platform with a set of predefined application templates to help customers get started, with more in the pipeline, and enterprise IT departments will also be able to define their own to suit their needs. In addition, configurations will be continually optimized using telemetry on system metrics fed back to Nebulon for analysis.

Nebulon envisions that the templates will make it easier for organizations to enable self-service provisioning to their workers, many of whom will have little idea about high availability or snapshots.

In addition, Nebulon ON supports API-driven automation, enabling enterprise customers to build configuration scripts or connect it with other management tools. The company said it will have a set of SDKs available for this at launch. General availability of Nebulon’s Cloud-Defined Storage is slated for September.
