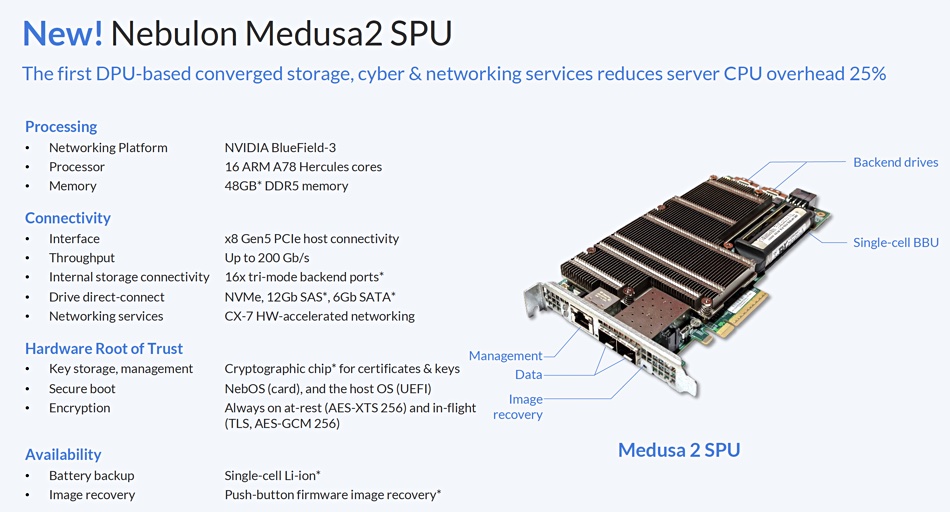

Nebulon has ported its niche server-management card functionality to an OEM Nvidia BlueField-3-based data processing unit (DPU), and so has become a fully fledged DPU supplier – offloading security, networking, storage and management functions from server host CPUs.

In its first iteration, Medusa1, Nebulon’s SPU (Storage Processing Unit) is a PCIe 3-attached, cloud-managed server card with an Arm processor and software that provides infrastructure management for the host server, securing it with a secure enclave and virtualizing locally attached storage drives into a SAN. The SPU is managed by Nebulon ON, a SaaS offering with four aspects: smartEdge, to manage edge sites as a fleet; smartIaaS; smartCore, to turn a VMware infrastructure into a hyperscale private cloud; and smartDefense, to protect against ransomware. Medusa2 goes much further, adding general security, networking and storage functions.

Nebulon CEO Siamak Nazari explained in a statement: “Enterprises have been demanding a hybrid cloud operating model that more closely resembles the hyperscalers, but have been faced with subpar options at best. With our new Medusa2 SPU, we help our customers get one step closer to achieving this goal, and can deliver it in a secure, unified package.”

Nebulon claims that Medusa2 marks the first time enterprises and service providers can unify enterprise data services, cyber and network services, and server lights-out management integration on a single DPU. It is said to eliminate the server CPU overhead and cybersecurity risk associated with hyperconverged infrastructure (HCI) software, freeing up processor cores; Nebulon equates this to a 25 percent reduction in software licensing costs, datacenter space and power consumption.

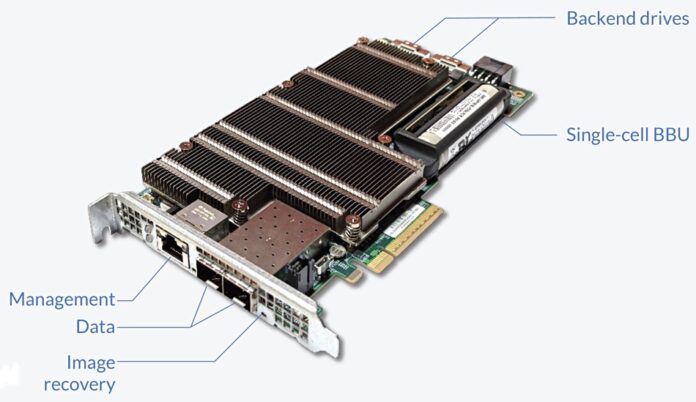

The Medusa2 card is based on BlueField-3 technology but is not an off-the-shelf BlueField card. It is a system-on-chip (SoC) device with 400Gbit/sec bandwidth, 48GB of DDR5 memory, and 8 lanes of PCIe 5 connectivity. COO Craig Nunes told us: “We can connect into 16 internal NVMe, SAS or SATA drives.”

The card also has a hardware root-of-trust feature. Nunes said: “There’s actually a chip on the card that stores certificates and keys so that we can make sure that only trusted, authenticated individuals can get access.”

Storage, network, and cyber services, along with server management, are completely offloaded from the host server. The host system does not require any additional drivers or software agents to be installed, as the Medusa2 card is host OS and application-agnostic. The vSphere hypervisor is not running on the card.

The Nebulon ON cloud-based control plane provides fleet-wide monitoring, global firmware updates, zero-trust authentication and an invisible quorum witness for 2-node high-availability systems.

Nunes said: “We support all the VMware integrations that you’re familiar with: Windows Server and Hyper-V integrations around clustering. We run a CSI driver for Kubernetes, for your Linux environments, doing OpenShift.” This means, he said, that it is a plug-and-play card for enterprises.

Competition

The whole point of using DPUs is to get extra server application performance by offloading low-level security, networking and storage work to a DPU card. The cost of the card has to be weighed against the extra application run-time performance. If 75 servers fitted with DPUs can do the work of 100 servers without them, a customer could retire 25 redundant servers, saving on power and software costs – particularly with core-based software licensing. Or they could keep all 100 servers and get about a third more application processing, which could generate more business. Nebulon claims that fitting its Medusa2 DPU to a server enables it to support up to 33 percent more application workloads.
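The consolidation arithmetic can be checked with a short sketch. It uses the article's hypothetical 75-versus-100 server example; the function name `dpu_consolidation` is our own illustration, and the numbers are not Nebulon benchmark data. It shows that one ratio gives two different percentages depending on the baseline: a 25 percent smaller fleet for the same work, or roughly 33 percent more work from the same fleet, which lines up with Nebulon's "up to 33 percent" figure.

```python
from fractions import Fraction


def dpu_consolidation(baseline_servers: int, dpu_servers_needed: int) -> tuple[int, Fraction, Fraction]:
    """Illustrative arithmetic only (hypothetical helper, not a vendor tool).

    baseline_servers:   servers needed for a workload without DPUs
    dpu_servers_needed: servers needed for the same workload with DPUs fitted
    """
    # Servers that could be retired if the workload stays the same
    servers_retired = baseline_servers - dpu_servers_needed
    # Retired servers as a share of the original fleet
    fleet_reduction = Fraction(servers_retired, baseline_servers)
    # Each DPU-equipped server does baseline/dpu_needed times the work of a
    # plain server, so fitting DPUs to the whole original fleet adds this much
    capacity_uplift = Fraction(baseline_servers, dpu_servers_needed) - 1
    return servers_retired, fleet_reduction, capacity_uplift


retired, reduction, uplift = dpu_consolidation(100, 75)
print(retired)                    # 25
print(f"{float(reduction):.0%}")  # 25%
print(f"{float(uplift):.0%}")     # 33%
```

Exact fractions are used so the 1/4 and 1/3 ratios are not blurred by floating-point rounding before display.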

DPU benefits become more visible as server fleets grow, which is why the CSP hyperscalers, with tens of thousands of servers, are prominent DPU users. Other hyperscalers will be prominent prospects for DPU sellers, of which there are effectively just three: AMD, Intel and Nebulon.

Intel’s Mount Evans IPU (Infrastructure Processing Unit, which is Intel’s preferred term for a DPU) has 16 Arm cores, like BlueField-3. This is shipping to Google Cloud and other customers.

Intel also has a Xeon-D-based Oak Springs Canyon IPU with a PCIe 4 interconnect.

AMD bought the Pensando DPU startup for $1.9 billion in August 2022 and is selling Pensando-based DSC-200 technology for software-defined networking and general DPU use.

Microsoft bought DPU startup Fungible in January this year, indicating that its Azure cloud will be using Fungible DPU tech in its hyperscale datacenters. With AWS having its in-house Nitro DPU technology and Google Cloud using Intel DPU technology, that means the three big CSPs are pretty much closed off from Nebulon.

Nebulon has existing relationships with Dell, HPE, Lenovo and Supermicro through which it supplies its Medusa1 SPUs to customers – “dozens of customers, large and small,” according to Nunes. It will be hoping to use these channels for its Medusa2 card, and may rely on its lights-out server management technology to differentiate itself from AMD and Intel. It also has relationships with global distributor TD SYNNEX and with Unicom Engineering for system integration activities.